electronics-journal.com

29

'21


Edge is the New Embedded

Cloud computing has delivered numerous advantages. It enables flexible working, including productive work from home, which proved very important during the pandemic, and it has democratised access to powerful, cutting-edge resources such as artificial intelligence (AI) and Big Data applications.

As the centre of the IoT, to which the many billions of IoT endpoints such as environmental sensors, industrial actuators, autonomous vehicles, and others ultimately report, the cloud is a critical enabler that lets individuals and businesses enjoy the benefits of a smarter world.

There are drawbacks, however. Maintaining an internet connection is energy-intensive and can be technically difficult or expensive for small devices. Networks can become congested with machine-to-machine (M2M) traffic if every piece of data must be sent back to the cloud. Moreover, relying on the cloud introduces latency that can impair service delivery and prevent IoT devices from acting deterministically in real time. Transmitting data across the network and sharing it with cloud applications also raises privacy and security issues.

Tracing the Processing Effort

The compute power needed to process data and make decisions based on the results is moving out from the cloud to the edge of the network. From the enterprise computing or carrier perspective, edge computing is associated with gateway devices at the periphery of the core network. The edge can be further subdivided: near-edge infrastructure typically hosts generic services, while applications become more specific at the far edge, closer to end-users.

With increasing compute power, the edge is becoming the intelligent edge. But why stop there? Moving powerful computing further outwards to encompass the endpoints of the IoT, including sensors, actuators, data aggregators, and gateways, is creating the embedded edge. Here, new solutions are emerging to help overcome the traditional constraints that face embedded designers, including power consumption, compute performance, memory footprint, and physical size.
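To make the traffic argument concrete, the following is a minimal sketch of one simple way an endpoint might decide locally what is worth sending upstream: a "report by exception" (deadband) filter. The function name, the threshold, and the temperature samples are all hypothetical and chosen only for illustration; a real device would tune the threshold to its sensor and application.

```python
def deadband_filter(readings, threshold):
    """Return only the readings worth transmitting upstream.

    A reading is kept when it differs from the last transmitted value
    by more than `threshold`; the first reading is always kept.
    """
    sent = []
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            sent.append(value)
            last_sent = value
    return sent


# Hypothetical temperature samples in degrees Celsius.
samples = [21.0, 21.1, 21.0, 21.2, 23.5, 23.6, 23.4, 21.1]
to_send = deadband_filter(samples, threshold=0.5)
print(f"{len(to_send)} of {len(samples)} samples transmitted: {to_send}")
```

Even this trivial local decision removes most of the uplink traffic for slowly changing signals. The machine-learning approaches discussed later apply the same idea to far richer data.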

What Does Edge Processing Bring?

Leveraging the gains delivered by successive generations of processors, which can handle more complex tasks and deliver faster performance while consuming less power, the embedded edge will continue to become more important as a pillar of IoT processing.

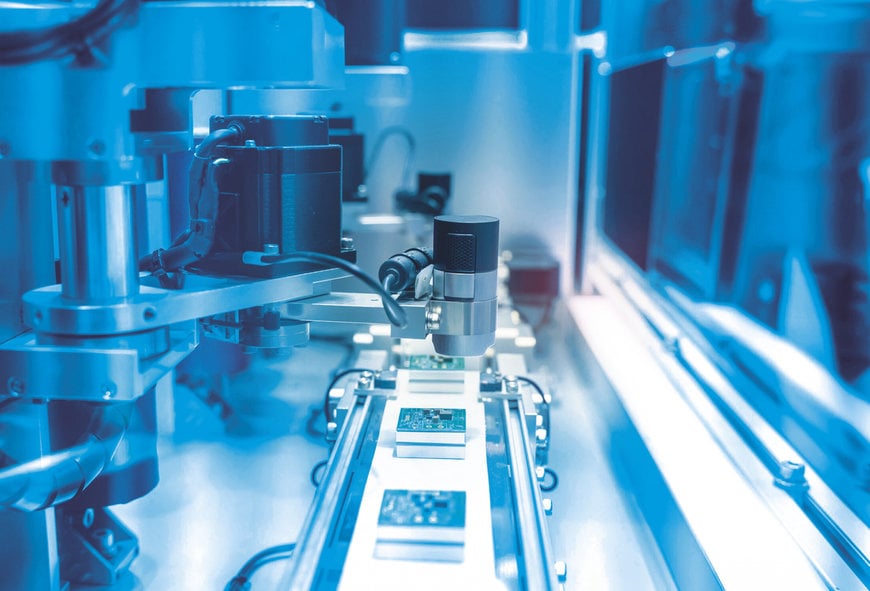

In particular, influential trends like Industry 4.0 and autonomous driving are bringing applications that demand the low latency and network independence that edge processing can deliver. Examples include robot vision (Figure 1) and vehicle guidance. These can be further accelerated and improved by running machine-learning inference on embedded systems.

Machine learning can outperform conventional machine-vision applications and permit additional capabilities. An Automated Guided Vehicle (AGV) can go beyond simply detecting objects in its path by identifying and classifying those objects. This is an increasingly important capability as factory workspaces become more crowded, with humans and mobile or static robots coexisting in close proximity.

Similarly, machine-learning techniques can enhance the efficiency of pattern recognition in industrial condition-monitoring systems to increase diagnostic accuracy. Other applications that can benefit from local machine-learning capability include smart agriculture, for example image recognition trained to identify crop diseases autonomously, without needing an internet connection.
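As a rough illustration of on-device condition monitoring, the sketch below learns what "healthy" vibration looks like and flags windows that deviate from it. It deliberately uses a simple statistical stand-in (a z-score on the RMS amplitude) rather than a neural network, and the class name, the limit of three standard deviations, and the sample data are assumptions made for this example only.

```python
import math
import statistics


def rms(window):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


class RmsAnomalyDetector:
    """Flags windows whose RMS is far from the values seen during training."""

    def __init__(self, z_limit=3.0):
        self.z_limit = z_limit
        self.mean = None
        self.stdev = None

    def fit(self, healthy_windows):
        # Learn the typical RMS level and its spread from healthy data.
        features = [rms(w) for w in healthy_windows]
        self.mean = statistics.mean(features)
        self.stdev = statistics.stdev(features)

    def is_anomalous(self, window):
        # Distance from the healthy mean, in standard deviations.
        z = abs(rms(window) - self.mean) / self.stdev
        return z > self.z_limit


# Hypothetical accelerometer windows recorded from a healthy machine.
healthy = [
    [0.9, -1.0, 1.1, -1.0],
    [1.0, -1.1, 1.0, -0.9],
    [1.1, -0.9, 0.9, -1.0],
    [1.0, -1.0, 1.0, -1.0],
]
detector = RmsAnomalyDetector()
detector.fit(healthy)

normal = [1.0, -1.0, 1.1, -0.9]   # similar to the training data
faulty = [2.5, -2.4, 2.6, -2.5]   # much stronger vibration

print("normal window anomalous?", detector.is_anomalous(normal))
print("faulty window anomalous?", detector.is_anomalous(faulty))
```

Everything here runs locally with no network connection. A trained model would replace the single RMS feature with learned patterns, but the flow of learning from healthy data and then scoring new windows on the device stays the same.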

Machine Learning with TinyML

The concept of Tiny Machine Learning (TinyML) is emerging to realise these capabilities in embedded devices. TinyML describes machine-learning frameworks tailored to the needs of resource-constrained embedded systems. Developers need tools to build and train machine-learning models and then optimise them for deployment on the edge device, such as a microcontroller, small processor, or FPGA.

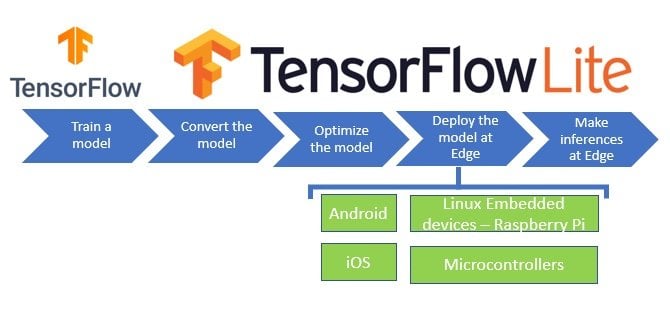

TinyML principles are embodied in edge-oriented machine-learning frameworks such as TensorFlow Lite (Figure 2). This popular and widely used framework comprises tools including a converter that optimises standard TensorFlow models to run on a target such as an embedded Linux device and an interpreter to run the optimised model.

Figure 2: TensorFlow Lite optimises machine-learning models for embedded deployment.

Moreover, TensorFlow Lite for Microcontrollers has been specifically created to run machine learning on devices that have extremely limited memory. The core runtime occupies only a few KBytes of memory and has been tested on many microcontrollers based on Arm® Cortex®-M cores. The TensorFlow Lite tools provide various ways to reduce the size of TensorFlow models to run on an embedded device or microcontroller.
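One widely used size-reduction technique is 8-bit quantization, in which each 32-bit floating-point value is stored as an 8-bit integer plus a shared scale and zero point, cutting storage for those values to roughly a quarter. The sketch below shows only the underlying arithmetic, real ≈ scale × (q − zero_point), in plain Python. The function names and example weights are hypothetical, and this is a simplified illustration rather than the converter's actual implementation.

```python
def quantize_int8(values):
    """Map floats onto int8 so that real ~= scale * (q - zero_point)."""
    # The range is widened to include 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    span = hi - lo
    scale = span / 255.0 if span > 0 else 1.0
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from their int8 representation."""
    return [scale * (q - zero_point) for q in quantized]


# Hypothetical layer weights.
weights = [-0.5, -0.1, 0.0, 0.3, 1.5]
quantized, scale, zero_point = quantize_int8(weights)
restored = dequantize(quantized, scale, zero_point)

print("int8 values:", quantized)
print("scale:", scale, "zero point:", zero_point)
print("largest round-trip error:",
      max(abs(a - b) for a, b in zip(weights, restored)))
```

The round-trip error is bounded by the scale, which is why quantization usually costs little accuracy when the value range is modest. Whether the trade-off is acceptable for a particular model still has to be checked on representative data.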

Solutions for Inferencing at the Edge

Leading microcontroller vendors now offer ecosystems and process flows for deploying AI inferencing and machine learning. Embedded microcontrollers are available whose architectures are designed to support the deployment of neural networks.

New generations of microcontrollers are emerging, designed specifically with machine-learning inference in mind. TI Sitara processors such as the AM5729 provide one example. As well as two Arm Cortex-A15 cores and a Cortex-M4 embedded core, the AM5729 contains four Embedded Vision Engines (EVE) that can support deep learning networks to ensure high inferencing performance. The TI Deep Learning (TIDL) software framework and TIDL interface help developers build, train, and deploy neural networks on embedded processors.

STMicroelectronics’ toolbox for neural networks includes the STM32Cube.AI conversion tool to convert trained neural networks created using various popular frameworks. The tool automatically generates libraries optimised for ST's STM32 Arm Cortex-M microcontrollers. The AI ecosystem also provides software function packs that contain the necessary low-level drivers and middleware libraries to deploy the trained neural network. There are also sample applications for audio-scene classification and human activity detection that help users quickly learn how to use embedded AI. A dedicated mobile app is also provided, along with ST’s SensorTile reference hardware, for running inferences or collecting data. The SensorTile is a turnkey board containing environmental and context sensors, all pre-integrated as a plug-and-play module.

Microchip offers machine-learning support for its microprocessors, FPGAs, and 32-bit microcontrollers such as the SAM D21 series. Its tools enable developers to use popular machine-learning frameworks such as TensorFlow, Keras, and Caffe, and TinyML frameworks like TensorFlow Lite. When working with microcontrollers or microprocessors using the MPLAB® toolset, developers can take advantage of tools such as the ML Plugin and MPLAB Data Visualizer to capture data for training neural networks using tools from Microchip partners. These include Cartesiam Nano Edge AI Studio, which automatically searches for AI models, helps analyse sensor data, and generates libraries, and Edge Impulse Studio with the Edge Impulse Inferencing SDK C++ library, which utilises TensorFlow Lite for Microcontrollers. Users can deploy their projects on their chosen MCU using the Microchip MPLAB X IDE.

Renesas’ embedded Artificial Intelligence (e-AI) platform comprises several concepts that help implement AI in endpoint devices. The Renesas RZ/A2M microprocessors feature Dynamically Reconfigurable Processor (DRP) technology, which combines the performance of a hardware accelerator with the flexibility of a CPU to allow high-speed, low-power machine vision. The platform also provides tools, including the e-AI Translator. This converts and imports the inference processing of trained neural network models into source code files that can be used within an e² studio IDE C/C++ project. The neural networks can be trained using an open-source deep learning framework like TensorFlow.

Also, makers, young engineers, and professionals alike can now experiment with building smart devices using Google AIY do-it-yourself AI kits and the Google Coral local AI platform. AIY kits include an intelligent camera that comprises a Raspberry Pi board and camera, a Vision bonnet, necessary cables and push buttons, and a simple cardboard enclosure that lets users quickly learn about image recognition. A similar intelligent speaker kit helps investigate voice recognition.

Google Coral provides a choice of hardware, including a development board, a mini development board, and a USB accelerator that offers a co-processor for users to "plug in" AI to existing products. The toolkit supports TensorFlow Lite, and all boards contain Google's Edge TPU, a tensor processing unit related to Google Cloud TPU that is purpose-designed for a small footprint and low power consumption.

Conclusion: The Future of the Edge is Embedded Intelligence

Raising the computing power of devices at the network edge helps ensure a reliable, high-performing, and privacy-conscious IoT. Equipment in various locations, ranging from network gateways and aggregators to IoT endpoints, can be regarded as edge devices. Increasingly, artificial intelligence is needed to meet demands for performance and efficiency, including machine-learning solutions for deployment on microcontrollers. These comprise open-source TinyML frameworks and optimised, accelerated microcontroller architectures from leading manufacturers. A variety of tools, platforms, and plug-and-play kits are available to help developers explore the possibilities, whether they are beginners or seasoned professionals.

www.mouser.com