electronics-journal.com

08

'26

AI Validation Framework for Safety-Critical Systems

Keysight Technologies introduces a lifecycle-based software platform to analyze, validate, and monitor AI behavior in regulated environments such as automotive and industrial systems.

www.keysight.com

Keysight Technologies has introduced AI Software Integrity Builder, a software solution designed to support the validation, assurance, and long-term maintenance of AI-enabled systems used in safety-critical applications. The platform addresses growing regulatory and engineering challenges as artificial intelligence becomes embedded in automotive, industrial, and other high-risk domains.

Why AI assurance has become a technical bottleneck

AI models increasingly influence real-time decisions in vehicles and industrial systems, yet their internal logic often remains difficult to interpret. This creates challenges for developers who must demonstrate predictable behavior, robustness, and compliance with emerging regulations. In the automotive sector, standards such as ISO/PAS 8800 and regulatory frameworks like the EU AI Act require explainability, traceability, and evidence of safe behavior across the AI lifecycle, without prescribing specific validation methodologies.

At the same time, AI development workflows are typically fragmented. Separate tools are used for dataset preparation, model training, testing, and deployment monitoring, making it difficult to maintain a continuous chain of evidence from development through operation. These gaps increase the risk of undetected bias, performance degradation, or non-conformance in deployed systems.

A lifecycle approach to AI system integrity

Keysight AI Software Integrity Builder is designed as a unified framework that spans the full AI lifecycle, from data analysis to in-field monitoring. Rather than focusing on a single phase of development, the software provides continuous visibility into how an AI system is trained, how it makes decisions, and how it behaves once deployed.

The platform is intended to help engineering teams answer a central technical question: how an AI system behaves internally, and whether that behavior remains within defined safety and performance boundaries under real-world conditions. This approach supports both pre-deployment validation and post-deployment maintenance; the latter is increasingly required for adaptive or continuously updated AI models.

Dataset and model transparency

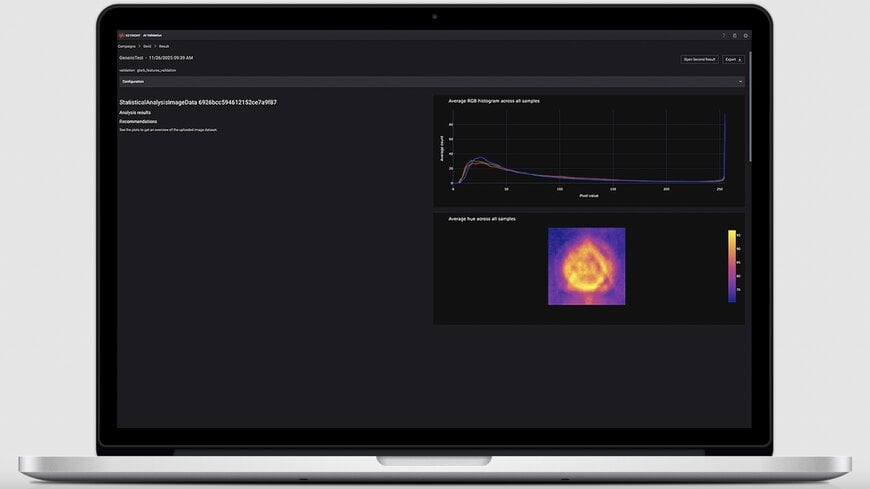

One core function of the solution is dataset analysis. Statistical methods are used to evaluate training and validation data for bias, coverage gaps, and inconsistencies that could affect downstream model behavior. By quantifying data quality, engineers can identify limitations that may not be apparent through accuracy metrics alone.
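The article does not say which statistical methods the product uses. As a generic illustration of the kind of check described above, the following minimal sketch quantifies class imbalance and finds empty regions in a feature range. The function names, the day/night labels, and the speed values are hypothetical and are not taken from Keysight's software.

```python
from collections import Counter
import math


def class_balance(labels):
    """Return per-class shares and normalized entropy (1.0 = perfectly balanced)."""
    counts = Counter(labels)
    total = len(labels)
    shares = {cls: n / total for cls, n in counts.items()}
    if len(counts) < 2:
        return shares, 0.0
    entropy = -sum(p * math.log(p) for p in shares.values())
    return shares, entropy / math.log(len(counts))


def coverage_gaps(values, low, high, bins):
    """Return the equal-width bins of [low, high) that contain no samples."""
    width = (high - low) / bins
    hit = set()
    for v in values:
        if low <= v < high:
            hit.add(min(int((v - low) / width), bins - 1))
    return [(low + i * width, low + (i + 1) * width)
            for i in range(bins) if i not in hit]


# Hypothetical data: lighting labels and vehicle speeds (km/h) in a training set.
labels = ["day"] * 90 + ["night"] * 10
speeds = [5, 12, 18, 55, 61]

shares, balance = class_balance(labels)
gaps = coverage_gaps(speeds, low=0, high=80, bins=4)
print(shares, round(balance, 3))
print(gaps)
```

In this toy data set, a model could reach high overall accuracy while seeing few night scenes and no samples between 20 and 40 km/h, which is the sort of limitation that accuracy metrics alone would not reveal.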

Model-based validation tools are then used to analyze how trained models arrive at specific decisions. This includes exposing correlations and decision patterns that may indicate overfitting, unintended dependencies, or sensitivity to specific input features. Such explainability is critical for meeting regulatory expectations in automotive and other safety-critical sectors.
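One widely used, model-agnostic way to measure sensitivity to specific input features is permutation importance: shuffle one feature column and record how much accuracy drops. The sketch below is a generic illustration of that technique, not a description of Keysight's tooling. The toy model, data, and function names are assumptions made for the example.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the expected label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, repeats=5, seed=0):
    """Mean accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only the chosen column replaced.
            permuted = [r[:col] + [v] + r[col + 1:]
                        for r, v in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Hypothetical model that depends only on feature 0 and ignores feature 1.
def toy_model(row):
    return int(row[0] > 0.5)


rows = [[0.1, 3.0], [0.2, 1.0], [0.3, 4.0], [0.4, 2.0],
        [0.6, 3.5], [0.7, 1.5], [0.8, 2.5], [0.9, 0.5]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

A feature the model ignores scores zero, while a large drop on a feature that should be irrelevant to the task would point to an unintended dependency worth investigating.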

Inference testing and operational monitoring

Beyond training and validation, the platform emphasizes inference-based testing, evaluating how AI models behave when exposed to real-world inputs that differ from training conditions. This helps detect behavioral drift, edge cases, or performance degradation that may emerge during operation.
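A common way to flag this kind of shift between training and operational inputs is the Population Stability Index (PSI), which compares how one feature is distributed in reference data and in live data. The sketch below is a generic example under stated assumptions; the article does not specify the drift metric the platform uses, and the 0.25 threshold is a conventional rule of thumb rather than a figure from the source.

```python
import math


def psi(reference, live, bins=10, eps=1e-6):
    """Population Stability Index of `live` against `reference` for one feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range live values into the first or last bin.
            counts[max(0, min(idx, bins - 1))] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values: training data spans 0..1, field data only 0.5..1.
reference = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cut-off for significant drift

score = psi(reference, live)
print(round(score, 2), "drift" if score > DRIFT_THRESHOLD else "stable")
```

In a monitoring loop, a score above the chosen threshold would trigger a review of the affected feature and, if needed, retraining or revalidation.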

By linking inference results back to dataset and model characteristics, the system supports iterative improvement of AI models. This closed-loop process is particularly relevant for applications such as automated driving, where models must remain reliable across diverse environments and over long deployment lifecycles.

Positioning in the AI assurance landscape

While many open-source and commercial tools address isolated aspects of AI development, such as model explainability or dataset analysis, they rarely provide an integrated view across the full lifecycle. Keysight’s approach focuses on connecting these phases to create a continuous assurance framework, aligning technical validation activities with regulatory and safety requirements.

For industries deploying AI in safety-critical systems, the software is positioned as a means to reduce compliance risk, improve transparency, and maintain control over AI behavior as systems evolve in the field.
