Intel Unveils Infrastructure Processing Unit

Intel’s IPU improves data center efficiency and manageability and is the only IPU built in collaboration with hyperscale cloud partners.

What’s New: Today during the Six Five Summit, Intel unveiled its vision for the infrastructure processing unit (IPU), a programmable networking device designed to enable cloud and communication service providers to reduce overhead and free up performance for central processing units (CPUs). With an IPU, customers will better utilize resources with a secure, programmable, stable solution that enables them to balance processing and storage.

“The IPU is a new category of technologies and is one of the strategic pillars of our cloud strategy. It expands upon our SmartNIC capabilities and is designed to address the complexity and inefficiencies in the modern data center. At Intel, we are dedicated to creating solutions and innovating alongside our customer and partners — the IPU exemplifies this collaboration.”

–Guido Appenzeller, chief technology officer, Data Platforms Group, Intel

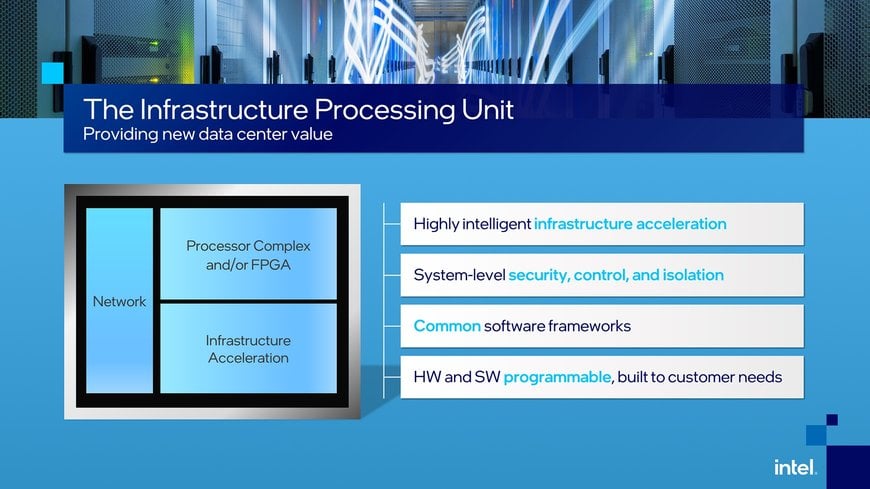

How It Works: The IPU is a programmable network device that intelligently manages system-level infrastructure resources by securely accelerating infrastructure functions in a data center.

It allows cloud operators to shift to a fully virtualized storage and network architecture while maintaining high performance and predictability, as well as a high degree of control.

The IPU has dedicated functionality to accelerate modern applications that are built using a microservice-based architecture in the data center. Research from Google and Facebook has shown 22%[1] to 80%[2] of CPU cycles can be consumed by microservices communication overhead.

With the IPU, a cloud provider can securely manage infrastructure functions while enabling its customers to fully control the functions of the CPU and system memory.

An IPU offers the ability to:

- Accelerate infrastructure functions, including storage virtualization, network virtualization and security with dedicated protocol accelerators.

- Free up CPU cores by shifting storage and network virtualization functions that were previously done in software on the CPU to the IPU.

- Improve data center utilization by allowing for flexible workload placement.

- Enable cloud service providers to customize infrastructure function deployments at the speed of software.
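To make the "free up CPU cores" point concrete, the following is a rough back-of-the-envelope sketch, not an Intel sizing method. It takes the 22% and 80% overhead figures cited above and estimates how many cores' worth of cycles return to applications. The function name `cores_freed`, the 64-core server and the 50% offload share are all hypothetical values chosen for illustration, and the sketch assumes the overhead in question is work an IPU could actually take on.

```python
def cores_freed(total_cores: int, overhead_fraction: float, offload_share: float) -> float:
    """Estimate the cores' worth of CPU cycles returned to applications.

    total_cores       -- CPU cores in the server (hypothetical input)
    overhead_fraction -- share of CPU cycles spent on infrastructure overhead
    offload_share     -- share of that overhead moved off the CPU (assumption)
    """
    if not 0.0 <= overhead_fraction <= 1.0:
        raise ValueError("overhead_fraction must be between 0 and 1")
    if not 0.0 <= offload_share <= 1.0:
        raise ValueError("offload_share must be between 0 and 1")
    # Cycles no longer spent on overhead, expressed in units of whole cores.
    return total_cores * overhead_fraction * offload_share


if __name__ == "__main__":
    # 0.22 and 0.80 are the overhead bounds cited in the text;
    # 64 cores and a 50% offload share are illustrative assumptions only.
    for overhead in (0.22, 0.80):
        freed = cores_freed(total_cores=64, overhead_fraction=overhead, offload_share=0.5)
        print(f"overhead {overhead:.0%}: about {freed:.1f} of 64 cores freed")
```

Real savings depend on which functions a given deployment offloads, so the result is best read as an upper-level estimate rather than a prediction.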

“As a result of Intel’s collaboration with a majority of hyperscalers, Intel is already the volume leader in the IPU market with our Xeon-D, FPGA and Ethernet components,” said Patty Kummrow, vice president in the Data Platforms Group and general manager of Ethernet Products Group at Intel. “The first of Intel’s FPGA-based IPU platforms are deployed at multiple cloud service providers and our first ASIC IPU is under test.”

Andrew Putnam, principal Hardware Engineering manager at Microsoft, said: “Since before 2015, Microsoft pioneered the use of reconfigurable SmartNICs across multiple Intel server generations to offload and accelerate networking and storage stacks through services like Azure Accelerated Networking. The SmartNIC enables us to free up processing cores, scale to much higher bandwidths and storage IOPS, add new capabilities after deployment, and provide predictable performance to our cloud customers. Intel has been our trusted partner since the beginning, and we are pleased to see Intel continue to promote a strong industry vision for the data center of the future with the infrastructure processing unit.”

What’s Next: Intel will roll out additional FPGA-based IPU platforms and dedicated ASICs. These solutions will be enabled by a powerful software foundation that allows customers to build leading-edge cloud orchestration software.

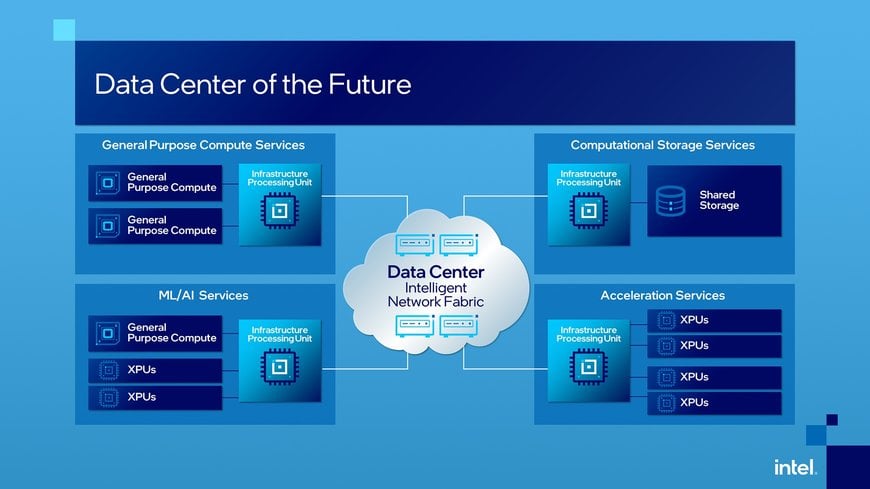

Why It Matters: Evolving data centers will require a new intelligent architecture in which large-scale, distributed, heterogeneous compute systems work together and are connected seamlessly to appear as a single compute platform. This new architecture will help resolve today’s challenges of stranded resources, congested data flows and incompatible platform security. This intelligent data center architecture will have three categories of compute (CPU for general-purpose compute, XPU for application-specific or workload-specific acceleration, and IPU for infrastructure acceleration) that will be connected through programmable networks to use data center resources efficiently.

www.intel.com