Leading research delivers AI firsts

At Qualcomm, we have a culture of innovation. We take pride in researching and developing fundamental technologies that will change the world. It’s also in our DNA to prototype these ideas and ensure that they are deployable and scalable under real-world conditions. We have a saying that if you haven't tested it, it doesn't work, so we seed ideas, build prototype systems, and see whether they are worth the effort to commercialize.

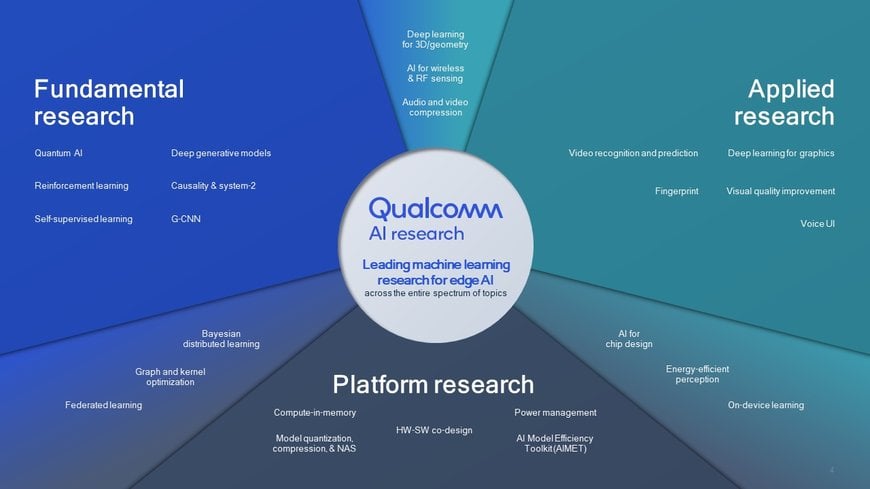

We cover the entire spectrum of AI research and development.

Qualcomm AI Research establishes firsts in AI across research and proof-of-concept. Accelerating the pipeline from AI research to commercialization has been daunting because deploying the technology in the real world faces many challenges beyond a lab setting where theoretical work is developed. Qualcomm AI Research has taken on the task of not only generating novel AI research but also being first to demonstrate proof-of-concepts on commercial devices, paving the path for technology to scale in the real world. In this blog post, I’ll cover both our novel AI research and our full stack AI research that has enabled first-ever proof-of-concept demonstrations on commercial mobile devices.

Innovating across the entire spectrum of AI

At Qualcomm AI Research, we do novel research that covers the entire spectrum of AI R&D to push the boundaries of what is possible with machine learning. Our research directions can be classified into three areas:

- Fundamental research looks the furthest into the future and is generally more foundational. Examples include Group-equivariant CNNs, or G-CNNs, and quantum AI.

- Platform research focuses on achieving the best power, performance, and latency when running AI models on hardware by applying techniques such as quantization and compression to models, as well as by enhancing compiler algorithms.

- Applied research is where we leverage both fundamental and platform advances in AI to achieve state-of-the-art results in specific use cases, like video recognition and prediction.

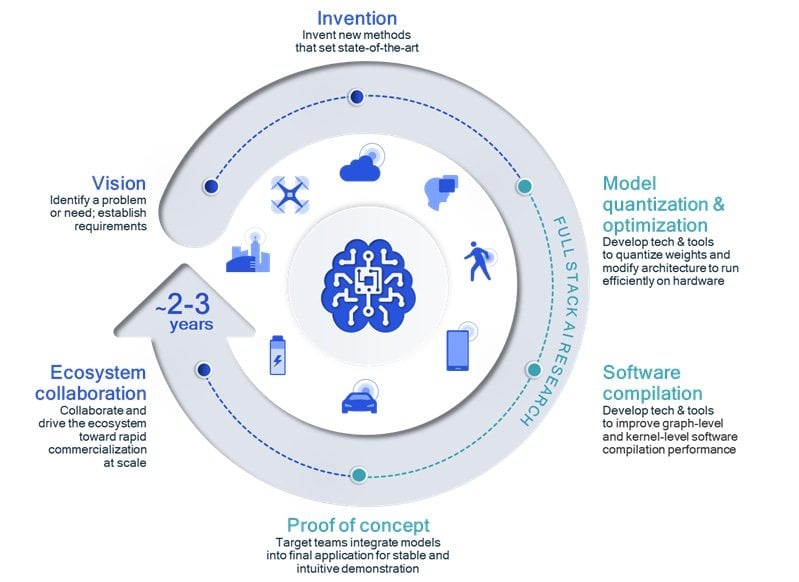

Deploying our innovations through full stack AI research

To bring these innovations to the masses, we need to dive deeper into full-stack AI research. This means researching optimization techniques across the application, the neural network model, the algorithms, the software, and the hardware, as well as working across disciplines within the company (and sometimes partnering with other companies). There are many layers of hardware and software that can be tuned and optimized to squeeze out every bit of performance at the lowest energy consumption. Once we have done full-stack optimization and proven out the technology, we can enable the ecosystem toward rapid commercialization at scale.

Our full stack research is essential to making fundamental research deployable.

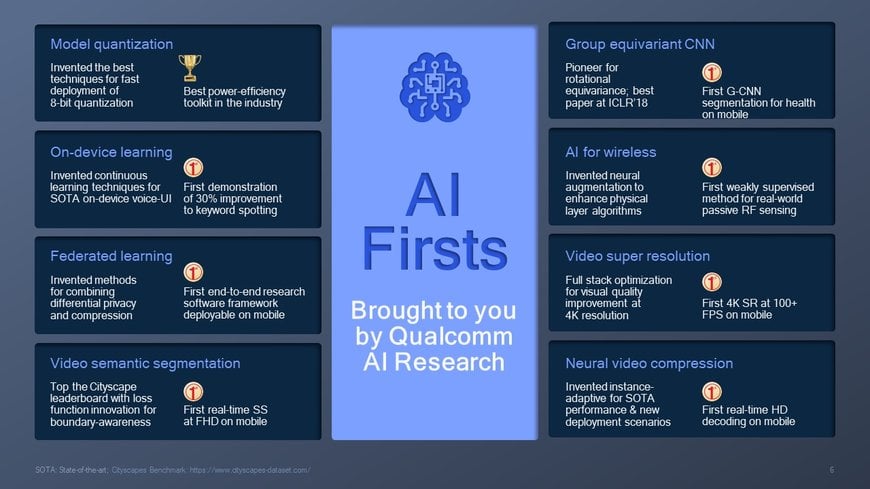

Driving AI firsts in research and proof-of-concepts

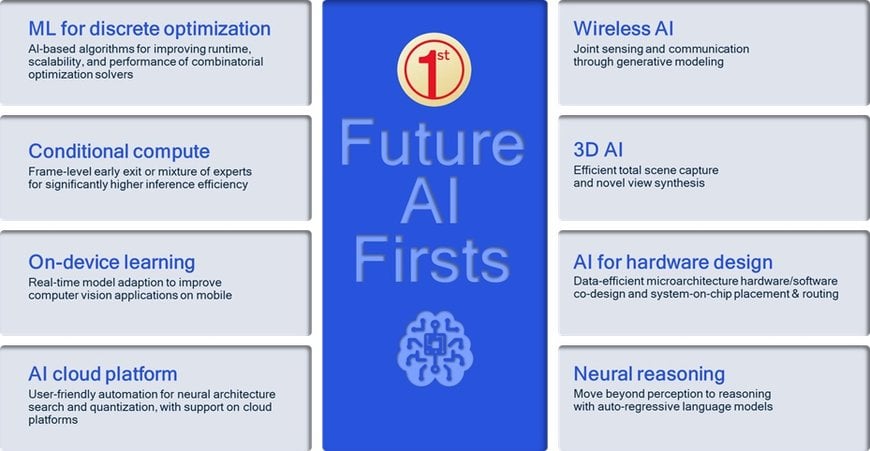

Our purposeful innovation has led to many AI firsts – these are firsts both in novel research and in proof-of-concepts running on target devices due to our full-stack optimizations.

Our AI firsts in both research and proof-of-concept.

I’ll briefly describe a few examples, but please tune into my webinar and download the presentation for a more detailed explanation of all the other examples.

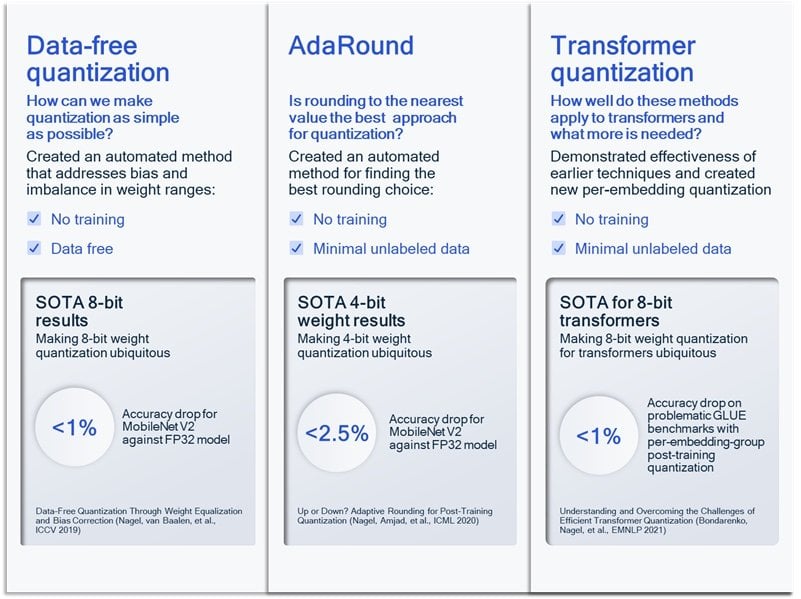

Model quantization

Although AI is bringing tremendous new capabilities as model sizes increase, it often comes at the cost of increased computation, energy consumption, and latency. Quantization reduces the precision of the weight parameters and activation computations of an AI model. If you can maintain model accuracy, then quantization offers compelling benefits, such as improved power efficiency, increased performance, and lower memory usage.
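To make the idea of reduced precision concrete, here is a minimal sketch of uniform 8-bit quantization in plain Python. It is only an illustration of the general technique, not the data-free quantization or AdaRound methods, and not the AIMET API; the function names and the example weights are made up for this sketch.

```python
def quantize_uint8(values):
    """Asymmetric (affine) uniform quantization of floats to 8-bit integers."""
    lo, hi = min(values), max(values)
    # Widen the range to include zero so that 0.0 maps to an exact integer.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-lo / scale)
    # Round to the nearest grid point and clamp to the uint8 range.
    q = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map 8-bit integers back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


# Hypothetical weights, chosen only for illustration.
weights = [-0.62, -0.1, 0.0, 0.33, 1.27]
q, scale, zp = quantize_uint8(weights)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 5), zp, max_error)
```

Each weight is stored in one byte instead of four, and in this example the rounding error per weight stays within half a quantization step (`scale / 2`). Whether that error is acceptable for a given model is exactly the accuracy question the paragraph above raises.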

We’ve invented the best techniques for fast deployment of 8-bit quantization while maintaining model accuracy, such as data-free quantization, AdaRound, and transformer quantization. We’re also driving the industry toward integer inference by open sourcing the research through the best power efficiency toolkit in the industry, the AI Model Efficiency Toolkit (AIMET).

We’ve invented the best techniques for fast deployment of 8-bit quantization.

Federated learning

The need for intelligent, personalized experiences powered by AI is ever-growing. Devices are producing more and more data that could help improve our AI experiences. However, how can we preserve privacy while taking advantage of this insightful data? Federated learning addresses this by training on the device and aggregating only model updates in a central location, so the raw data never leaves the device. To improve federated learning, we’ve invented methods for combining differential privacy and compression. Our Differentially Private Relative Entropy Coding (DP-REC) method preserves privacy and enables high compression for a drastic reduction in communications. We also demonstrated the first federated learning end-to-end research software framework for the mobile ecosystem, showing a voice user verification example.
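The basic loop of on-device learning plus central aggregation can be sketched in a few lines. The example below is plain federated averaging on a toy one-parameter model, assuming two hypothetical devices with made-up data; it includes neither differential privacy nor compression, so it is not DP-REC and not our research framework.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Gradient descent on one device's private data.

    Toy model: y = w * x, trained with mean squared error.
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w, clients):
    """One round: devices train locally, the server averages their updates."""
    total = sum(len(d) for d in clients)
    # The server sees only model deltas, weighted by local dataset size.
    delta = sum(
        (local_update(global_w, d) - global_w) * len(d) for d in clients
    ) / total
    return global_w + delta


# Hypothetical per-device datasets; every point follows y = 3 * x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, clients)
print(w)
```

The `(x, y)` pairs stay inside `local_update`; only the difference between the local and global weight is sent back. Methods such as differential privacy and compression would act on that transmitted update.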

Neural video compression

The demand for improved data compression continues to grow since the scale of video being created and consumed is massive. AI-based compression has compelling benefits over conventional algorithms and codecs. We invented an instance-adaptive compression method that can customize the codec for the specific video rather than using a generic codec. This research achieved state-of-the-art results, including a 24 percent BD-rate savings over the leading neural codec by Google. We also demonstrated the first HD neural video decoder on mobile. Through full stack research, like a novel parallel entropy decoding algorithm, quantization, and hardware optimization, we can run the neural decoders in real time for HD videos.

Super resolution

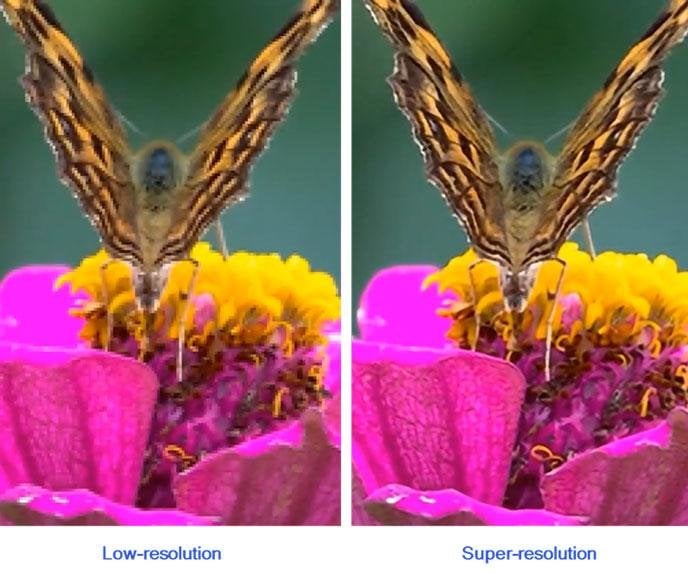

Super resolution creates a high-resolution image with superior visual quality from a low-resolution image. This capability is very useful across many applications, from gaming and photography to XR and autonomous driving. AI-based super resolution offers improved visual quality over traditional methods. Until now, on-device deployment that meets real-time performance, latency, and power requirements has not been feasible. With our full-stack optimization, which included a hardware-optimized custom model architecture and quantization, we achieved the first 4K super resolution at 100+ frames per second on a mobile device.

2X super resolution running on a mobile device at more than 100 frames per second.

AI for wireless

AI applied to wireless can offer many enhanced or new capabilities, such as improved communications or radio frequency (RF) sensing. Neural networks can be used to augment traditional algorithms in wireless communications, thereby achieving increased performance, an approach we call neural augmentation. We can combine the inductive bias from wireless domain knowledge with neural networks to improve interpretability and out-of-domain generalization and to achieve better sample complexity. We invented neural augmentation to enhance physical layer algorithms. Two examples of neural augmentation are hypernetwork Kalman filtering and generative channel modeling. For RF sensing, we showcased the first weakly supervised method for real-world passive RF sensing at MWC 2021 and NeurIPS 2021. Our new machine-learning-based method works on large floor plans and only requires weakly labeled training data and a floor plan.
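As a rough illustration of what augmenting a traditional algorithm can look like, the sketch below implements a standard one-dimensional Kalman filter whose noise parameters come from a pluggable function. This is an assumption-laden toy, not our hypernetwork Kalman filtering method: the `noise_model` hook, the fixed noise values, and the measurements are all hypothetical, and a learned network is only indicated as something that could take the place of `fixed_noise`.

```python
def kalman_1d(measurements, noise_model, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (nearly) constant state.

    noise_model(z, x) returns (process_var, measurement_var). In a
    neurally augmented variant, a learned model could supply these
    values; here that hook is purely hypothetical.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        q, r = noise_model(z, x)
        p = p + q            # predict: uncertainty grows by process noise
        k = p / (p + r)      # Kalman gain
        x = x + k * (z - x)  # update the estimate toward the measurement
        p = (1 - k) * p      # uncertainty shrinks after the update
        estimates.append(x)
    return estimates


def fixed_noise(z, x):
    """Classical choice: hand-tuned, constant noise variances."""
    return 1e-4, 0.25


# Made-up noisy readings of a quantity whose true value is about 1.0.
measurements = [1.2, 0.8, 1.1, 0.9, 1.05, 0.95, 1.0, 1.1]
estimates = kalman_1d(measurements, fixed_noise)
print(estimates[-1])
```

The filter structure (predict, gain, update) carries the domain knowledge, while the swappable `noise_model` marks one place where a data-driven component could adapt the filter to conditions that are hard to model by hand.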

Innovating for our next AI firsts

Our purposeful innovation is continuing, and we expect many more AI firsts across a diverse range of topics as we work on novel research and full stack optimizations.

Our innovation cycle is continuing, along with our next potential AI firsts.

We will continue to solve system and feasibility challenges with full-stack optimizations to quickly move our research toward commercialization. If you are excited about solving big problems with cutting-edge AI technology and improving the lives of billions of people, come join our team.

www.qualcomm.com