electronics-journal.com

07

'26


SK hynix Unveils Next-Generation AI Memory at CES 2026

At CES 2026 in Las Vegas (January 6–9), SK hynix highlights an expanded AI memory portfolio of new HBM4, server-oriented memory modules, and NAND flash solutions designed to meet data center and AI system demands.

www.skhynix.com

At CES 2026, SK hynix Inc. presented an expanded suite of next-generation memory technologies engineered for artificial intelligence workloads and large-scale data systems. The showcase focused on high-bandwidth memory (HBM) advancements, AI-optimized memory modules, and high-capacity NAND flash products for integration into data centers and the broader AI ecosystem.

New Memory Architectures for AI Workloads

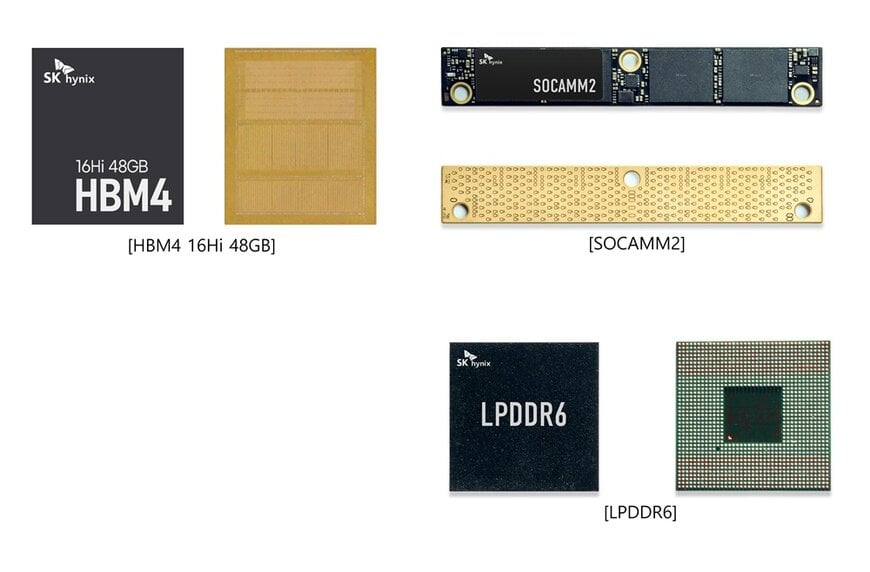

SK hynix introduced its next-generation 16-layer HBM4 with 48 GB capacity at the event, its first public demonstration of this configuration. The 16-layer product, under active development in collaboration with customers, succeeds the 12-layer, 36 GB HBM4, which is noted for achieving industry-leading speeds of approximately 11.7 Gbps. The taller stack raises per-stack capacity from 36 GB to 48 GB to meet the memory demands of advanced AI accelerators and high-performance computing platforms.
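The capacity and speed figures above can be related with simple arithmetic. The minimal sketch below assumes the 2048-bit per-stack interface defined in the JEDEC HBM4 standard (the article does not state the interface width), treats 11.7 Gbps as a per-pin data rate, and assumes all dies in a stack have equal density. Under those assumptions, both the 36 GB and 48 GB stacks imply 24 Gb dies, and 11.7 Gbps per pin corresponds to roughly 3 TB/s of peak bandwidth per stack.

```python
# Sketch: HBM4 stack arithmetic under stated assumptions.
# Assumption (not from the article): a 2048-bit data interface per HBM4 stack,
# as defined by the JEDEC HBM4 standard, and equal-density dies in each stack.

HBM4_INTERFACE_BITS = 2048  # assumed data I/O width per stack


def per_die_gigabits(stack_gb: int, layers: int) -> float:
    """Capacity of one DRAM die in gigabits (1 GB = 8 Gb)."""
    return stack_gb * 8 / layers


def peak_stack_bandwidth_gb_per_s(pin_rate_gbps: float,
                                  interface_bits: int = HBM4_INTERFACE_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: pins x per-pin rate / 8 bits per byte."""
    return interface_bits * pin_rate_gbps / 8


for layers, capacity_gb in [(12, 36), (16, 48)]:
    print(f"{layers}-high, {capacity_gb} GB: "
          f"{per_die_gigabits(capacity_gb, layers):.0f} Gb per die")

print(f"Peak bandwidth at 11.7 Gbps/pin: "
      f"{peak_stack_bandwidth_gb_per_s(11.7):.1f} GB/s")
```

Note that in this model, adding layers raises capacity but leaves peak bandwidth unchanged, because bandwidth depends only on interface width and per-pin rate.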

Alongside HBM4, the company displayed its 12-layer, 36 GB HBM3E, which it expects to be a key market driver this year, shown integrated into GPU modules for AI servers. These high-bandwidth memory products target large-scale training and inference systems, where sustained data transfer rates are critical.

Memory Modules and System Integration

To improve AI server efficiency, SK hynix presented SOCAMM2, a low-power memory module designed for AI servers. The module aims to balance performance with energy efficiency, a growing priority in data center design, where operating costs and heat dissipation significantly affect total cost of ownership.

The company also highlighted LPDDR6, the next-generation low-power DRAM standard, positioned for on-device AI tasks. Relative to its predecessors, LPDDR6 aims to deliver higher data rates and lower power consumption, characteristics critical for mobile and embedded AI platforms.

NAND Flash and High-Capacity Storage

In response to the expanding storage needs of AI data centers, SK hynix showcased a 321-layer 2 Tb QLC NAND flash product tailored for ultra-high-capacity enterprise SSDs (eSSDs). This NAND technology features improvements in power efficiency and performance over prior generations, addressing the increasing demand for bulk storage in data-intensive AI workflows.
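The "2 Tb QLC" designation translates into capacity figures with basic arithmetic. QLC stores four bits per cell, so a 2 Tb die needs about 2^39 cells and holds 256 GiB of raw data. The sketch below assumes binary units and ignores spare area, ECC, and over-provisioning, so real drives expose less user capacity; the 64 TiB target is a hypothetical example, not an SK hynix product figure.

```python
# Sketch: raw-capacity arithmetic for a 2 Tb QLC NAND die.
# Assumptions (not from the article): binary units (1 Tb = 2**40 bits) and no
# spare area, ECC, or over-provisioning, so real drives hold less user data.

BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}
DIE_BITS = 2 * 2**40  # 2 Tb per die


def cells_per_die(cell_type: str, die_bits: int = DIE_BITS) -> int:
    """Minimum number of cells needed to store die_bits at the given bits per cell."""
    return -(-die_bits // BITS_PER_CELL[cell_type])  # ceiling division


def dies_for_raw_capacity(target_bytes: int, die_bits: int = DIE_BITS) -> int:
    """Smallest number of dies whose combined raw capacity reaches target_bytes."""
    die_bytes = die_bits // 8
    return -(-target_bytes // die_bytes)  # ceiling division


print(DIE_BITS // 8 // 2**30, "GiB per die")  # 256 GiB per die
print(cells_per_die("QLC"), "cells per QLC die")
print(dies_for_raw_capacity(64 * 2**40), "dies for a hypothetical 64 TiB raw drive")  # 256
```

Storing the same 2 Tb in TLC would need about one third more cells, which is the density advantage QLC trades against endurance and write performance.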

Demonstrating System-Level AI Memory Integration

The exhibition included an “AI System Demo Zone” illustrating how diverse memory solutions interconnect within broader AI system architectures. Demonstrations covered custom HBM (cHBM) designs tailored to specific AI chips or systems; processing-in-memory (PIM), which integrates computation directly into memory; compute-using-DRAM (CuD) mechanisms; and CXL Memory Module-Accelerator xPU (CMM-Ax) configurations, which pair the bandwidth of Compute Express Link (CXL) interfaces with computational capability at the memory layer. These approaches aim to reduce data movement bottlenecks and improve system efficiency for large-scale AI models.
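Why computing near memory eases data movement can be shown with a toy model of a large sum reduction. On the host, every element must cross the memory interface; with PIM-style near-memory compute, each compute unit returns only a partial sum. All parameters below (element count, number of compute units, 100 GB/s link) are hypothetical, and the model ignores internal memory bandwidth, compute time, and the final host-side combination of partials. It is not a model of any SK hynix cHBM, PIM, CuD, or CMM-Ax design.

```python
# Toy model: bytes crossing the memory interface for a large sum reduction,
# computed either on the host or near memory (PIM-style).
# All parameters are hypothetical; this is not a model of any SK hynix product.


def host_side_bytes(num_elements: int, elem_bytes: int) -> int:
    """Host-side reduction: every element must be read across the interface."""
    return num_elements * elem_bytes


def near_memory_bytes(num_units: int, partial_bytes: int) -> int:
    """Near-memory reduction: each compute unit returns one partial sum."""
    return num_units * partial_bytes


def transfer_time_s(num_bytes: int, link_gb_per_s: float) -> float:
    """Idealized transfer time at a sustained link bandwidth (1 GB = 1e9 bytes)."""
    return num_bytes / (link_gb_per_s * 1e9)


N = 1 << 30  # hypothetical: 2**30 FP16 values (2 GiB of data)
host = host_side_bytes(N, elem_bytes=2)
pim = near_memory_bytes(num_units=1024, partial_bytes=4)  # hypothetical FP32 partials

print(f"host-side: {host} bytes, "
      f"{transfer_time_s(host, 100.0) * 1e3:.2f} ms at 100 GB/s")
print(f"near-memory: {pim} bytes, reduction factor {host // pim}x")
```

The gap grows with data size, which is why reduction-heavy or bandwidth-bound AI kernels are common targets for processing-in-memory designs.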

Relevance for Data Center and AI Ecosystem

The technologies SK hynix showcased at CES 2026 reflect ongoing industry trends toward more tightly integrated memory hierarchies in AI systems, where capacity and bandwidth constraints directly affect training and inference performance. The expanded portfolio supports a range of deployment scenarios, from server-class AI accelerators to mobile AI devices, as well as automotive and other sectors where real-time processing and large model datasets are increasingly prevalent.

While specific commercial availability timelines were not disclosed in detail, the demonstrations underscore SK hynix’s focus on providing scalable memory solutions across evolving AI infrastructure requirements.
